Introduction

This research analyzes how often web crawlers are blocked from entire websites across a diverse range of sites. Among other questions, it aims to answer “which web crawlers (robots) are most frequently blocked by top-traffic sites?” and “which categories of sites block each of the common web crawlers?” The study examines how frequently specific web crawlers (robots) are blocked across a dataset of 223,014 analyzed websites. We first analyzed 86,151 top-traffic sites between April 15 and April 25, then repeated the analysis for 223,014 top-traffic sites between July 4 and July 30. Both runs drew on our backlog of 1 million high-traffic websites, and the second run includes the sites checked in the first. We analyzed more websites in the second run in order to draw more comprehensive conclusions. The dataset combines a gradual automated analysis of the 1 million high-traffic sites with websites that users analyzed manually through our robots.txt checking tool.

Robots.txt Fun Fact:

Did you know that in our analysis of the robots.txt file of over 223,000 top-traffic sites:

- 🥇 FitnessFirst.com has the most user-agent declarations in its robots.txt file, with a total of 2,158 user agents.

- 🥈 Op.nysed.gov follows with 1,807 user agents.

- 🥉 En.mimi.hu is third with 1,801 user agents.
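For readers who want to check counts like these on their own sites, the sketch below shows one way to tally user-agent declarations in a robots.txt file. It is a minimal illustration, not necessarily how our tool counts them: the function name `count_user_agents` is hypothetical, and because it is an assumption whether the figures above count every `User-agent` line or only distinct names, the sketch returns both.

```python
def count_user_agents(robots_txt: str) -> tuple[int, int]:
    """Hypothetical helper: return (User-agent lines, distinct names, case-insensitive)."""
    total = 0
    distinct = set()
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "user-agent":
            total += 1
            distinct.add(value.strip().lower())
    return total, len(distinct)


sample = """
User-agent: GPTBot
Disallow: /

User-agent: gptbot   # same bot, different casing
User-agent: CCBot
Disallow: /
"""
print(count_user_agents(sample))  # (3, 2)
```

Counting distinct lowercased names avoids inflating the total when a file repeats the same bot with different casing.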

Data Collection

- Website Selection: The dataset draws websites from two primary sources: an automated process that analyzes the 1 million top-traffic websites, and websites submitted manually by users of our free robots.txt testing tool.

- Web Crawler Blocking Analysis: The analysis focuses on the robots.txt files of the selected sites, identifying instances where specific web crawlers are blocked. Each website is represented only once in the dataset: we use the most recent analysis of each website's robots.txt file to avoid duplication.

Data Sources

- Automated Analysis: The list of sites comes from DomCop’s list of the top 10 million websites, which is derived from the Common Crawl project. Websites are selected from this list for automated analysis based on their Open PageRank.

- Manual Analysis: Users contribute to the dataset by manually submitting domains for analysis via our robots.txt testing tool. These submissions broaden the scope of the dataset and add a wider variety of websites. However, the number of user-submitted websites is very small by comparison.

Data Processing

- Crawler Grouping: To streamline the analysis, crawlers from the same organization are grouped together. For example, various user agents associated with Semrush are consolidated into a single category, enhancing the clarity and interpretability of the results.

- Crawler Name Normalization: The crawler names extracted from robots.txt files undergo normalization to account for variations in formatting and casing. This ensures accurate data categorization and aggregation.

- Occurrence Counting: A blocking occurrence is counted only when a bot is blocked from the entire site. If a bot is only partially blocked (blocked from certain paths but not the entire site), it is not counted. This criterion ensures that the analysis reflects only instances where bots are effectively excluded from accessing the site’s content.

- Deduplication: Each website is included only once in our analysis; only the latest analysis of each website contributes to the results.

- Website Categories: In the first run of our analysis, websites were categorized using a curated list of 185 categories. In the second run, we consolidated this list into 18 broader categories, using ChatGPT-4 to help create and organize them. The final list then underwent a manual human review to ensure accuracy, minimal overlap, and comprehensive coverage. This approach generalized the categories so the results are easier to understand, while retaining essential information. The categories are listed below:

- News & Media

- Technology & Computing

- Education & Research

- Health & Wellness

- Business & Finance

- Lifestyle

- Arts & Culture

- Science & Environment

- Community & Social

- Commerce & Shopping

- Entertainment & Recreation

- Professional Services

- Government & Public Services

- Transport & Logistics

- Cultural & Historical

- Publishing & Writing

- Events & Conferences

- Technology Infrastructure

Here are some examples of sites in each category:

| Category Name | Example Sites |
|---|---|
| News & Media | bbc.co.uk, nytimes.com, techcrunch.com, theguardian.com, washingtonpost.com |
| Technology & Computing | google.com, googletagmanager.com, maps.google.com, support.google.com, github.com |
| Education & Research | arxiv.org, ted.com, sciencedirect.com, apa.org, researchgate.net |
| Health & Wellness | who.int, thelancet.com, cdc.gov, healthline.com, mayoclinic.org |
| Business & Finance | forbes.com, ft.com, bloomberg.com, cnbc.com, mckinsey.com |
| Lifestyle | potofu.me, chefsfriends.nl, socialbutterflyguy.com, loveinlateryears.com, homefixated.com |
| Arts & Culture | pinterest.com, flickr.com, commons.wikimedia.org, archive.org, canva.com |
| Science & Environment | nasa.gov, ncbi.nlm.nih.gov, nationalgeographic.com, nature.com, news.sciencemag.org |
| Community & Social | facebook.com, instagram.com, vk.com, reddit.com, discord.gg |
| Commerce & Shopping | play.google.com, amazon.com, itunes.apple.com, fiverr.com, cdn.shopify.com |
| Entertainment & Recreation | youtube.com, vimeo.com, tiktok.com, open.spotify.com, twitch.tv |
| Professional Services | linkedin.com, mayerbrown.com, jobs.microfocus.com, pwc.com, randygage.com |
| Government & Public Services | ec.europa.eu, gov.uk, fao.org, un.org, europa.eu |
| Transport & Logistics | proterra.com, uber.com, bullettrain.jp, logisticpoint.net, rdw.com.au |
| Cultural & Historical | loc.gov, web-japan.org, mnhs.org, biblearchaeology.org, cliolink.com |
| Publishing & Writing | en.wikipedia.org, medium.com, blogger.com, ameblo.jp, issuu.com |
| Events & Conferences | eventbrite.com, calendly.com, meetup.com, sxsw.com, veritas.org |
| Technology Infrastructure | fonts.googleapis.com, ajax.googleapis.com, google-analytics.com, gstatic.com, cdn.jsdelivr.net |
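The crawler grouping, name normalization, and occurrence-counting steps described under Data Processing can be sketched in Python as follows. This is a minimal, hypothetical illustration rather than our production code: the helper names, the `/version`-stripping rule, the Semrush prefix rule, and the exact definition of a full block (a group naming the crawler that contains `Disallow: /` or `Disallow: /*` and no non-empty `Allow` rule) are all assumptions. Wildcard (`*`) groups are skipped, since only named crawlers are counted.

```python
# Hypothetical sketch of normalization, grouping, and full-site block detection.

# Assumed grouping rule: any normalized name starting with a key maps to one label.
GROUP_PREFIXES = {"semrushbot": "SemrushBot"}

# Assumed Disallow paths that exclude the whole site.
FULL_BLOCK_PATHS = {"/", "/*"}


def normalize(name: str) -> str:
    """Trim whitespace, drop an assumed '/version' suffix, and lowercase."""
    return name.strip().split("/", 1)[0].strip().lower()


def canonical(name: str) -> str:
    """Map a raw user-agent token to its organization label (or normalized name)."""
    norm = normalize(name)
    for prefix, label in GROUP_PREFIXES.items():
        if norm.startswith(prefix):
            return label
    return norm


def parse_groups(robots_txt: str) -> list[tuple[list[str], list[tuple[str, str]]]]:
    """Split robots.txt into (user_agents, [(field, path), ...]) groups."""
    groups = []
    agents: list[str] = []
    rules: list[tuple[str, str]] = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        field, sep, value = line.partition(":")
        if not sep:
            continue
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            if rules:  # a User-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules = [], []
            agents.append(value)
        elif field in ("allow", "disallow") and agents:
            rules.append((field, value))
    if agents:
        groups.append((agents, rules))
    return groups


def fully_blocked_bots(robots_txt: str) -> set[str]:
    """Return canonical names of crawlers whose own group blocks the entire site."""
    blocked = set()
    for agents, rules in parse_groups(robots_txt):
        blocks_root = any(f == "disallow" and v in FULL_BLOCK_PATHS for f, v in rules)
        has_allow = any(f == "allow" and v for f, v in rules)
        if blocks_root and not has_allow:  # partial blocks are not counted
            blocked.update(canonical(a) for a in agents if a != "*")
    return blocked


sample = """
User-agent: GPTBot
User-agent: SemrushBot-SA
Disallow: /

User-agent: AhrefsBot
Disallow: /private/

User-agent: *
Disallow: /
"""
print(sorted(fully_blocked_bots(sample)))  # ['SemrushBot', 'gptbot']
```

In this sample, AhrefsBot is only blocked from `/private/`, so it is not counted, and the wildcard group is ignored even though it disallows everything.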

Analysis

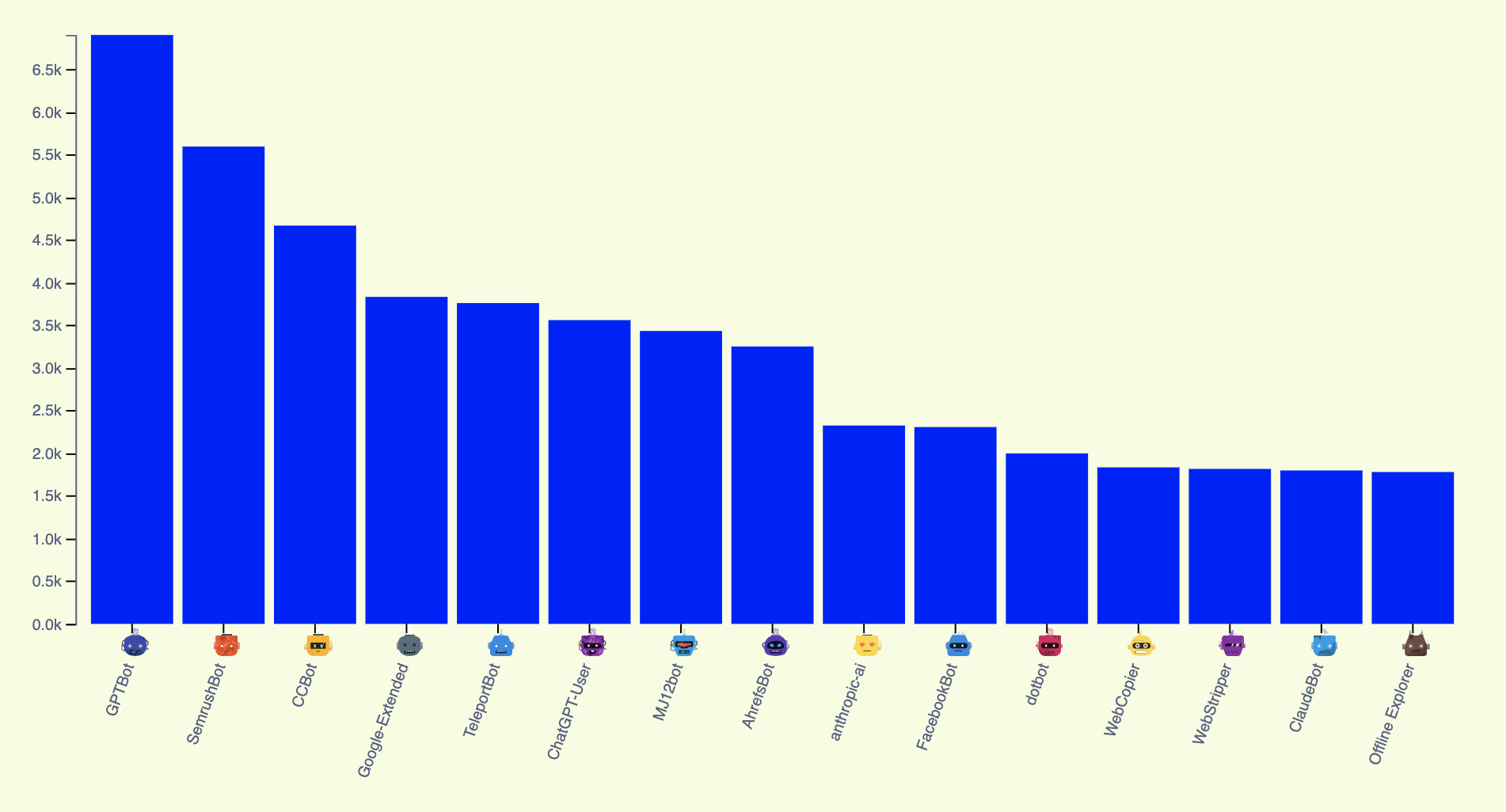

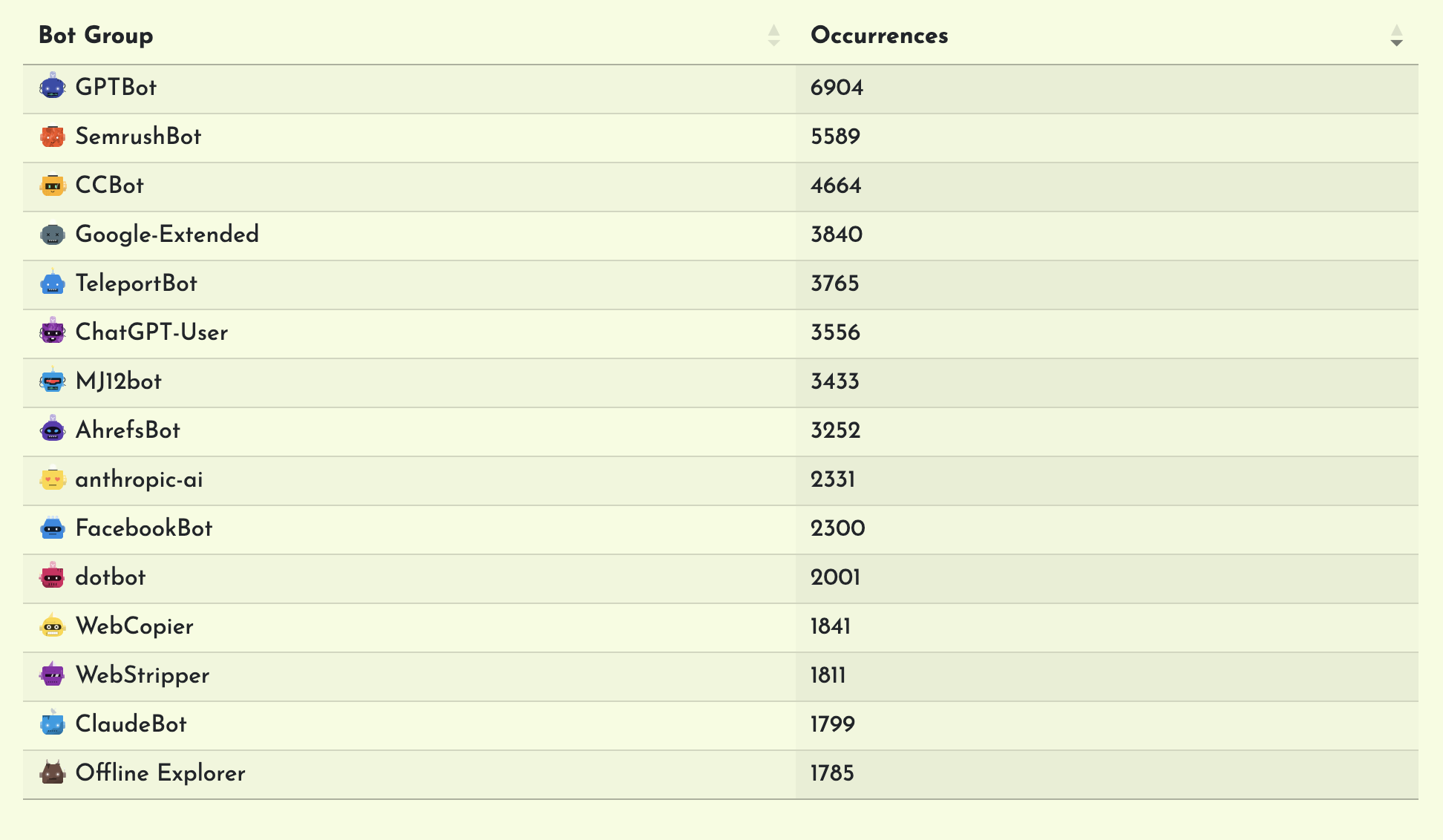

- Visualization: The research uses a bar chart to visualize how often each crawler is excluded. The Y-axis represents the number of websites blocking a specific robot, while the X-axis lists the bot names. A stacked bar chart also shows how crawler exclusion is distributed across different categories of websites.

- Statistical Analysis: Simple quantitative analysis is conducted to identify trends and patterns in robot exclusions. The frequency of robot blocking is examined to discern prevalent practices among website owners.

- Interpretation: The findings can be interpreted to provide insights into the prevalence and significance of web crawler exclusions in the online ecosystem, especially among top-traffic websites and among different categories of sites. Implications for website owners, technical SEO strategies, and robot behavior can be derived from this data by other interested researchers.

Results

The results of the ongoing analysis are published on Nexunom’s Robots.txt Checker page. Here is a snapshot of the results at 223,014 analyzed websites. The bar chart shows, for each of the top 15 crawlers, the number of websites whose robots.txt file disallows that crawler from accessing the entire site, and the accompanying table gives the exact number of times each of these 15 crawlers was blocked.

As the top-15 table shows, GPTBot, SemrushBot, CCBot, Google-Extended, and TeleportBot are the five most-blocked crawlers in the robots.txt files of top-traffic sites. The table below extends the list to the 60 most-blocked web crawlers, along with each crawler’s rank.

| Rank | Crawler Name | Category | Occurrences |
|---|---|---|---|
| 1 | GPTBot | AI | 6904 |
| 2 | SemrushBot | SEO | 5589 |
| 3 | CCBot | AI | 4664 |
| 4 | Google-Extended | AI | 3840 |
| 5 | TeleportBot | Web Scraping | 3765 |
| 6 | ChatGPT-User | AI | 3556 |
| 7 | MJ12bot | SEO | 3433 |
| 8 | AhrefsBot | SEO | 3252 |
| 9 | anthropic-ai | AI | 2331 |
| 10 | FacebookBot | Social Media | 2300 |
| 11 | dotbot | SEO | 2001 |
| 12 | WebCopier | Web Scraping | 1841 |
| 13 | WebStripper | Web Scraping | 1811 |
| 14 | ClaudeBot | AI | 1799 |
| 15 | Offline Explorer | Web Scraping | 1785 |
| 16 | WebZIP | Web Scraping | 1785 |
| 17 | Bytespider | Web Scraping | 1761 |
| 18 | Claude-Web | AI | 1756 |
| 19 | SiteSnagger | Web Scraping | 1755 |
| 20 | larbin | Web Scraping | 1649 |
| 21 | Amazonbot | Search Engine | 1629 |
| 22 | MSIECrawler | Web Scraping | 1616 |
| 23 | omgilibot | Web Scraping | 1604 |
| 24 | PetalBot | Search Engine | 1574 |
| 25 | Baiduspider | Search Engine | 1570 |
| 26 | blexbot | SEO | 1556 |
| 27 | omgili | Web Scraping | 1537 |
| 28 | HTTrack | Web Scraping | 1460 |
| 29 | wget | Web Scraping | 1414 |
| 30 | ZyBORG | Web Scraping | 1400 |
| 31 | PerplexityBot | AI | 1371 |
| 32 | Yandex | Search Engine | 1370 |
| 33 | WebReaper | Web Scraping | 1358 |
| 34 | NPBot | SEO | 1349 |
| 35 | Xenu | SEO | 1342 |
| 36 | ia_archiver | Web Scraping | 1332 |
| 37 | TurnitinBot | Academic | 1240 |
| 38 | grub-client | Web Scraping | 1239 |
| 39 | sitecheck.internetseer.com | SEO | 1195 |
| 40 | Fetch | Web Scraping | 1189 |
| 41 | cohere-ai | AI | 1180 |
| 42 | Zealbot | Web Scraping | 1179 |
| 43 | Download Ninja | Web Scraping | 1179 |
| 44 | linko | SEO | 1169 |
| 45 | libwww | Web Scraping | 1156 |
| 46 | Zao | Web Scraping | 1122 |
| 47 | Microsoft.URL.Control | Web Scraping | 1117 |
| 48 | UbiCrawler | Web Scraping | 1098 |
| 49 | DOC | Web Scraping | 1089 |
| 50 | magpie-crawler | Web Scraping | 1064 |
| 51 | k2spider | Web Scraping | 1051 |
| 52 | Diffbot | Web Scraping | 961 |
| 53 | DataForSeoBot | SEO | 944 |
| 54 | fast | Web Scraping | 856 |
| 55 | psbot | Web Scraping | 820 |
| 56 | Mediapartners-Google* | AdBot | 815 |
| 57 | 008 | Web Scraping | 738 |
| 58 | Scrapy | Web Scraping | 722 |
| 59 | Zeus | Web Scraping | 697 |
| 60 | WebBandit | Web Scraping | 685 |

The table above shows that 5 of the top 10 blocked web crawlers are AI-related, while SEO and web scraping tools account for 4 of the top 10 (3 SEO crawlers and 1 web scraper).
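This tally can be checked directly against the top 10 rows of the ranking table. The short Python snippet below copies those rows verbatim and counts them by bot category.

```python
from collections import Counter

# Top 10 rows copied from the ranking table: (rank, crawler, bot category).
TOP_10 = [
    (1, "GPTBot", "AI"),
    (2, "SemrushBot", "SEO"),
    (3, "CCBot", "AI"),
    (4, "Google-Extended", "AI"),
    (5, "TeleportBot", "Web Scraping"),
    (6, "ChatGPT-User", "AI"),
    (7, "MJ12bot", "SEO"),
    (8, "AhrefsBot", "SEO"),
    (9, "anthropic-ai", "AI"),
    (10, "FacebookBot", "Social Media"),
]

counts = Counter(category for _, _, category in TOP_10)
print(counts["AI"])                            # 5
print(counts["SEO"] + counts["Web Scraping"])  # 4 (3 SEO + 1 Web Scraping)
print(counts["Social Media"])                  # 1
```

The remaining slot in the top 10 goes to FacebookBot, the only Social Media crawler in that range.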

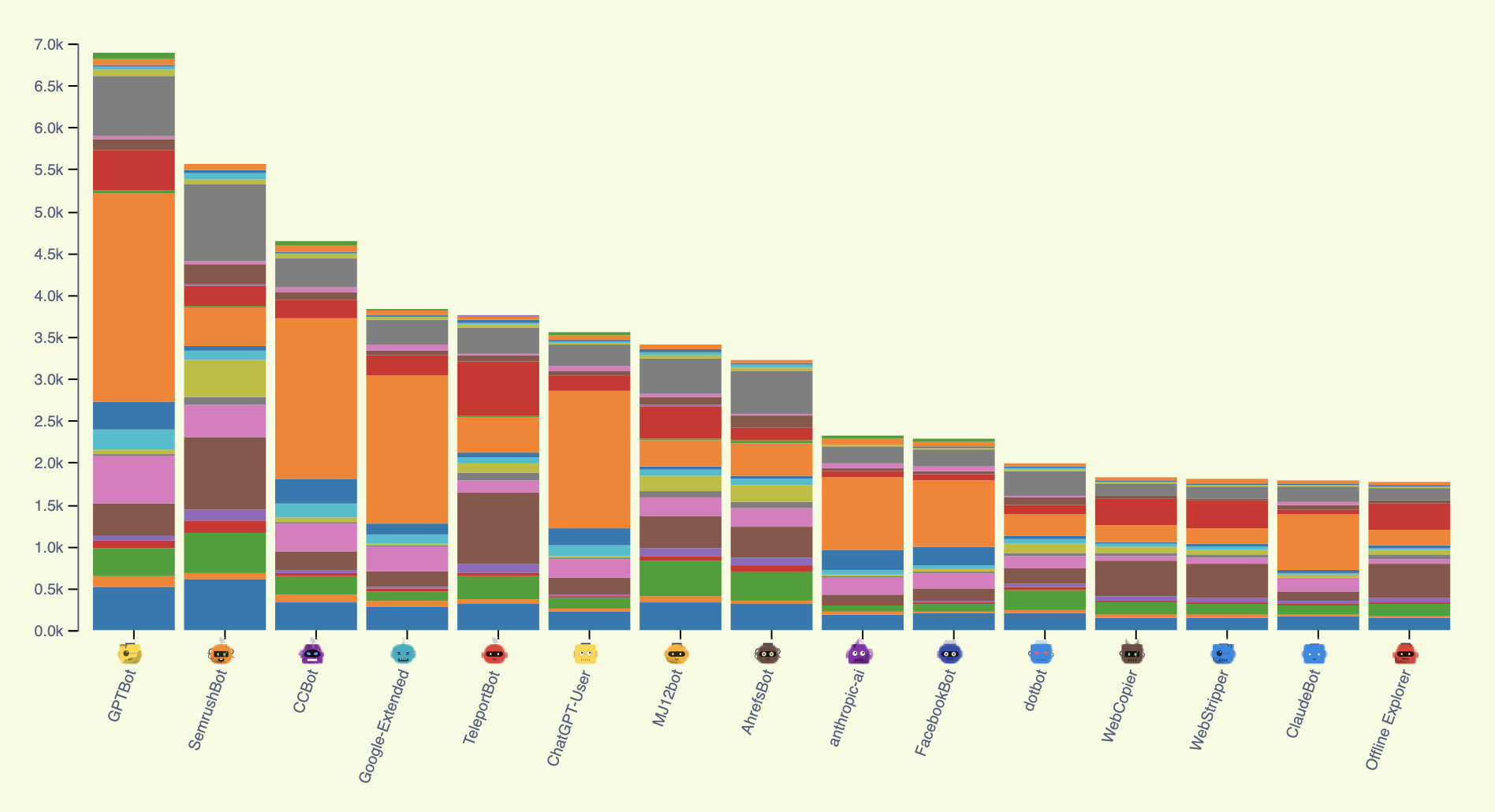

The stacked bar chart shows which “categories of sites” block a specific crawler in their robots.txt files. For example, the stacked bar for GPTBot reveals that “News & Media,” “Technology & Computing,” and “Entertainment & Recreation” are the top categories of sites blocking OpenAI’s GPTBot crawler. A similar pattern, with News & Media in first place, holds for the other AI crawlers.

Note: Unlike the bar chart above, this stacked chart includes only the websites from our dataset of 1 million top-traffic sites and excludes sites analyzed by users via Nexunom’s robots.txt checker, so it better represents the blocking behavior of top-traffic sites.

The following table shows the top five “site categories” blocking each of the 15 most-blocked crawlers.

| Web Crawler | Site Category | Blocked Occurrences | Bot Category |
|---|---|---|---|
| AhrefsBot | Technology & Computing | 500 | SEO |
| AhrefsBot | News & Media | 393 | SEO |
| AhrefsBot | Education & Research | 366 | SEO |
| AhrefsBot | Commerce & Shopping | 341 | SEO |
| anthropic-ai | News & Media | 870 | AI |
| anthropic-ai | Lifestyle | 225 | AI |
| anthropic-ai | Technology & Computing | 207 | AI |
| anthropic-ai | Entertainment & Recreation | 197 | AI |
| anthropic-ai | Arts & Culture | 178 | AI |
| CCBot | News & Media | 1916 | AI |
| CCBot | Technology & Computing | 354 | AI |
| CCBot | Arts & Culture | 335 | AI |
| CCBot | Entertainment & Recreation | 332 | AI |
| CCBot | Lifestyle | 300 | AI |
| ChatGPT-User | News & Media | 1639 | AI |
| ChatGPT-User | Technology & Computing | 266 | AI |
| ChatGPT-User | Entertainment & Recreation | 223 | AI |
| ChatGPT-User | Lifestyle | 218 | AI |
| ChatGPT-User | Arts & Culture | 212 | AI |
| ClaudeBot | News & Media | 662 | AI |
| ClaudeBot | Arts & Culture | 170 | AI |
| ClaudeBot | Technology & Computing | 170 | AI |
| ClaudeBot | Entertainment & Recreation | 158 | AI |
| ClaudeBot | Education & Research | 115 | AI |
| dotbot | Technology & Computing | 285 | SEO |
| dotbot | News & Media | 256 | SEO |
| dotbot | Commerce & Shopping | 242 | SEO |
| dotbot | Arts & Culture | 200 | SEO |
| dotbot | Education & Research | 182 | SEO |
| FacebookBot | News & Media | 786 | Social Media |
| FacebookBot | Lifestyle | 216 | Social Media |
| FacebookBot | Arts & Culture | 204 | Social Media |
| FacebookBot | Technology & Computing | 203 | Social Media |
| FacebookBot | Entertainment & Recreation | 188 | Social Media |
| Google-Extended | News & Media | 1771 | AI |
| Google-Extended | Technology & Computing | 300 | AI |
| Google-Extended | Entertainment & Recreation | 287 | AI |
| Google-Extended | Arts & Culture | 280 | AI |
| Google-Extended | Publishing & Writing | 252 | AI |
| GPTBot | News & Media | 2480 | AI |
| GPTBot | Technology & Computing | 724 | AI |
| GPTBot | Entertainment & Recreation | 559 | AI |
| GPTBot | Arts & Culture | 507 | AI |
| GPTBot | Publishing & Writing | 492 | AI |
| MJ12bot | Technology & Computing | 438 | SEO |
| MJ12bot | Commerce & Shopping | 420 | SEO |
| MJ12bot | Publishing & Writing | 387 | SEO |
| MJ12bot | Education & Research | 384 | SEO |
| MJ12bot | Arts & Culture | 336 | SEO |
| Offline Explorer | Education & Research | 410 | Web Scraper |
| Offline Explorer | Publishing & Writing | 319 | Web Scraper |
| Offline Explorer | News & Media | 191 | Web Scraper |
| Offline Explorer | Arts & Culture | 147 | Web Scraper |
| Offline Explorer | Commerce & Shopping | 139 | Web Scraper |
| SemrushBot | Technology & Computing | 913 | SEO |
| SemrushBot | Education & Research | 874 | SEO |
| SemrushBot | Arts & Culture | 617 | SEO |
| SemrushBot | Commerce & Shopping | 484 | SEO |
| SemrushBot | News & Media | 454 | SEO |
| TeleportBot | Education & Research | 842 | Web Scraper |
| TeleportBot | Publishing & Writing | 651 | Web Scraper |
| TeleportBot | News & Media | 420 | Web Scraper |
| TeleportBot | Arts & Culture | 308 | Web Scraper |
| TeleportBot | Technology & Computing | 307 | Web Scraper |
| WebCopier | Education & Research | 418 | Web Scraper |
| WebCopier | Publishing & Writing | 322 | Web Scraper |
| WebCopier | News & Media | 189 | Web Scraper |
| WebCopier | Arts & Culture | 155 | Web Scraper |
| WebCopier | Commerce & Shopping | 144 | Web Scraper |
| WebStripper | Education & Research | 413 | Web Scraper |
| WebStripper | Publishing & Writing | 318 | Web Scraper |
| WebStripper | News & Media | 194 | Web Scraper |
| WebStripper | Arts & Culture | 151 | Web Scraper |
| WebStripper | Technology & Computing | 142 | Web Scraper |

As the table above indicates, News & Media is the site category that most often blocks AI crawlers such as GPTBot, ChatGPT-User, Google-Extended, anthropic-ai, and ClaudeBot. For SEO crawlers such as SemrushBot, AhrefsBot, and MJ12bot, the top blocking site category is Technology & Computing. For web scrapers such as Offline Explorer and WebCopier, the top blocking site category is Education & Research.
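Each of these comparisons amounts to picking, for every crawler, the site category with the largest blocked-occurrence count. The sketch below does this in Python for a subset of rows copied from the table; the function name `top_category` is hypothetical and chosen for illustration.

```python
# Subset of rows copied from the table: (crawler, site category, blocked occurrences).
ROWS = [
    ("GPTBot", "News & Media", 2480),
    ("GPTBot", "Technology & Computing", 724),
    ("GPTBot", "Entertainment & Recreation", 559),
    ("SemrushBot", "Technology & Computing", 913),
    ("SemrushBot", "Education & Research", 874),
    ("SemrushBot", "Arts & Culture", 617),
    ("TeleportBot", "Education & Research", 842),
    ("TeleportBot", "Publishing & Writing", 651),
    ("TeleportBot", "News & Media", 420),
]


def top_category(rows):
    """Hypothetical helper: map each crawler to its most frequently blocking site category."""
    best = {}
    for crawler, category, count in rows:
        if crawler not in best or count > best[crawler][1]:
            best[crawler] = (category, count)
    return {crawler: category for crawler, (category, _) in best.items()}


print(top_category(ROWS))
```

Running it on the full table reproduces the pattern described above: News & Media for the AI crawlers, Technology & Computing for the SEO crawlers, and Education & Research for the web scrapers.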

Limitations and Considerations

- User Contributions: While the dataset may include domains searched by users in our robots.txt tester, the proportion of such data is considered negligible and does not significantly influence the results.

- Single Domain Representation: Each domain is counted only once in the analysis, with the latest crawl of its robots.txt file contributing to the dataset. This approach ensures fair representation and avoids skewing the results based on multiple entries for the same domain.

- Incomplete Data: While efforts are made to continuously update the dataset, it may not capture all domains or reflect instantaneous changes in robot exclusion practices across the web.

- Potential Sampling Bias: The dataset’s composition may be influenced by sampling bias inherent in how domains were selected for analysis from the top 1 million high-traffic websites, which may limit how well the findings generalize to the web as a whole.
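The single-domain representation rule above can be illustrated with a small sketch that keeps only the latest analysis per domain before counting blocks per crawler. The record layout (domain, run number, set of fully blocked bots), the case-insensitive domain key, and the function names are hypothetical assumptions; run 1 and run 2 simply stand in for an earlier and a later analysis of the same site.

```python
from collections import Counter

# Hypothetical records: (domain, run number, set of fully blocked bots).
records = [
    ("example.com", 1, {"gptbot"}),
    ("example.org", 1, {"ccbot"}),
    ("Example.com", 2, {"gptbot", "ccbot"}),  # later run of the same domain
]


def latest_per_domain(records):
    """Keep only the most recent analysis for each (case-insensitive) domain."""
    latest = {}
    for domain, run, blocked in records:
        key = domain.lower()  # assumed: domains compared case-insensitively
        if key not in latest or run > latest[key][0]:
            latest[key] = (run, blocked)
    return {domain: blocked for domain, (_, blocked) in latest.items()}


def count_blocks(records):
    """Count, per bot, how many distinct domains fully block it."""
    counts = Counter()
    for blocked in latest_per_domain(records).values():
        counts.update(blocked)  # each domain contributes at most 1 per bot
    return counts


print(count_blocks(records))
```

Here example.com contributes only its run-2 result, so GPTBot is counted once and CCBot twice, rather than GPTBot being double-counted across runs.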

Analysis of the Results

Conclusion One:

The results indicate that certain categories of web crawlers are among the most frequently blocked across the analyzed websites. Notably, crawlers associated with artificial intelligence tools (AI crawlers), such as GPTBot, Google-Extended, and ChatGPT-User, feature prominently among the most-blocked crawlers. CCBot (Common Crawl Bot), whose crawl data has historically been used to train AI tools, is also among the top five. In total, 5 of the top 10 blocked crawlers are AI-related.

PerplexityBot, another AI crawler, ranks 31st among the most-blocked crawlers, possibly because it is newer or less widely known. ClaudeBot and Claude-Web rank 14th and 18th, respectively, giving them a more prominent position among the most-blocked AI crawlers.

Conclusion Two:

Additionally, web crawlers associated with search engine optimization (SEO) tools, such as SemrushBot, MJ12bot (Majestic SEO web crawler), AhrefsBot, and dotbot (Moz web crawler), are prevalent in the list of frequently blocked crawlers, at positions 2, 7, 8, and 11, respectively.

Conclusion Three:

Another interesting finding was that SEO-related web crawlers were blocked most by technology and computing sites, AI-related web crawlers by news and media sites, and web scraper crawlers by education and research sites.

While these findings offer insights into common robot-exclusion practices, the results are presented for informational purposes only. Some web crawlers, particularly those related to AI tools such as GPTBot or Google-Extended, may contribute valuable traffic to the sites they crawl. Therefore, although certain bot categories clearly dominate robot exclusions, we advise against blocking crawlers hastily, so that legitimate bot traffic is not inadvertently excluded.

The findings contribute to our understanding of crawler management practices and can help shape strategies for handling web crawler traffic effectively. If you want to contribute to this research or have any suggestions or recommendations for us, please feel free to leave a comment below.